Anypoint Connector for ServiceNow (ServiceNow Connector) provides connections between Mule runtime engine (Mule) and ServiceNow apps. Use the connector's operations with custom ServiceNow tables, as well as any operations available through installed plugins.

Prerequisites

To use this connector, you must be familiar with:

- Anypoint Connectors

- Mule runtime engine (Mule)

- Elements in a Mule flow and global elements

- How to create a Mule app using Design Center or Anypoint Studio

Before creating an app, you must have access to the ServiceNow target resource and Anypoint Platform.

Audience

- Starting user: To create your Mule app, use Design Center or Anypoint Studio.

- Power user: Read the XML and Maven Support and Examples topics. The Examples topic provides one or more use cases for using the connector.

Common Use Cases For the Connector

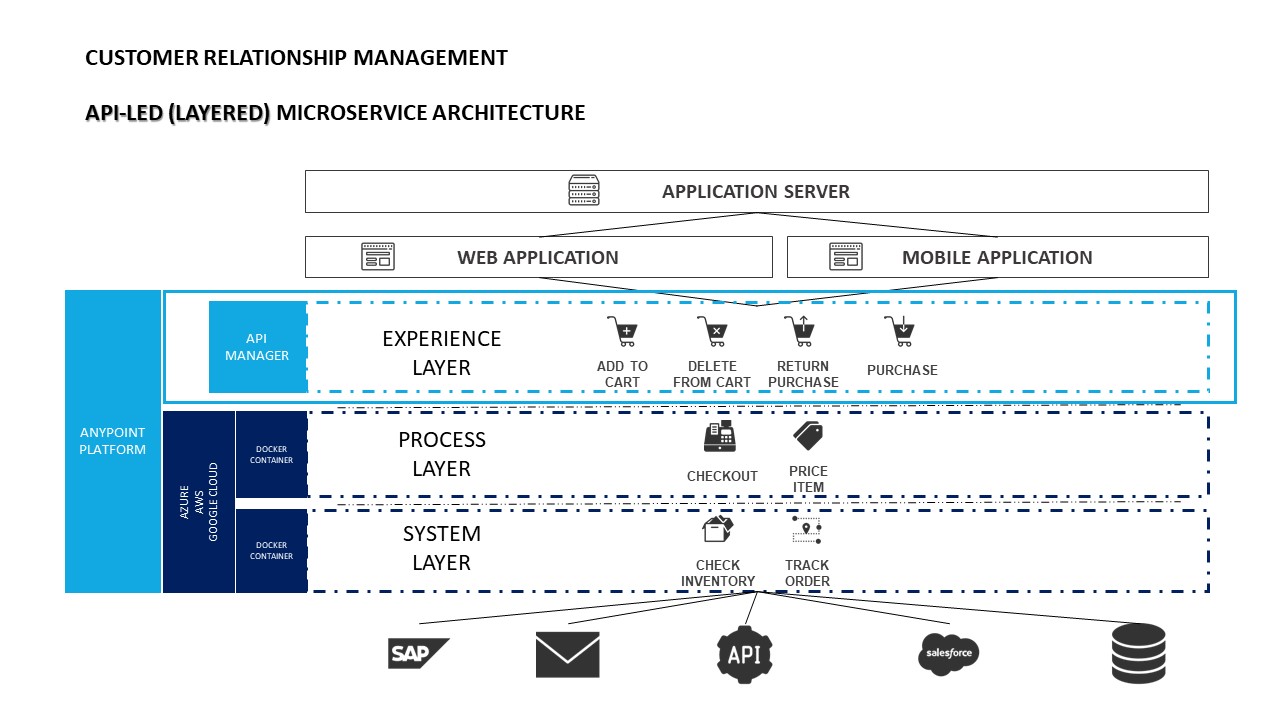

ServiceNow Connector enables organizations to integrate business processes across HR, legal, procurement, operations, marketing, and facilities departments. Because more than 120 Anypoint connectors are available, you can quickly connect ServiceNow to systems inside and outside the enterprise.

Use ServiceNow Connector to create instant API connectivity with the ServiceNow API, and quickly and easily interface with ServiceNow from within Anypoint Platform.

An example use case is getting a ServiceNow Training incident record.

Connection Types

The following authentication types are supported:

- Basic

- OAuth 2.0 Authorization Code
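The connector builds the credentials for you from its configuration, so you do not write this code in a Mule app. As a rough illustration of what Basic authentication means at the HTTP level, the following sketch builds the `Authorization` header defined by the HTTP Basic scheme. The function name and the sample credentials are hypothetical and are not part of the connector.

```python
import base64


def basic_auth_header(username: str, password: str) -> dict:
    """Build an HTTP Basic Authorization header (hypothetical helper).

    The Basic scheme joins the user name and password with a colon and
    Base64-encodes the result. Base64 is an encoding, not encryption,
    so Basic authentication should only travel over HTTPS.
    """
    raw = f"{username}:{password}".encode("utf-8")
    token = base64.b64encode(raw).decode("ascii")
    return {"Authorization": f"Basic {token}"}


# Placeholder credentials, for illustration only.
header = basic_auth_header("integration.user", "change-me")
```

OAuth 2.0 Authorization Code replaces the stored password with a token obtained after the user grants access, which is why it needs additional configuration compared with Basic.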

Limitations

- OAuth 2.0 Authorization Code authentication works only with the following versions of Mule:

  - 4.1.5

  - 4.2.1 and later

- Metadata does not work with OAuth 2.0 Authorization Code authentication.
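The version rule above is easy to misread, because 4.1.5 is supported but later 4.1.x and 4.2.0 releases are not. The following sketch encodes the rule as stated; the function names are hypothetical, and it assumes a plain dotted numeric version string with no suffix.

```python
def parse_version(version: str) -> tuple:
    """Turn a dotted version such as '4.2.1' into a tuple of ints."""
    return tuple(int(part) for part in version.split("."))


def supports_oauth2_authorization_code(mule_version: str) -> bool:
    """Return True if the Mule version matches the documented limitation.

    Supported: exactly 4.1.5, or 4.2.1 and later.
    """
    version = parse_version(mule_version)
    return version == (4, 1, 5) or version >= (4, 2, 1)


for candidate in ("4.1.4", "4.1.5", "4.2.0", "4.2.1", "4.3.0"):
    print(candidate, supports_oauth2_authorization_code(candidate))
```

Tuple comparison in Python is element by element, which matches how dotted version numbers are ordered when every part is numeric.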

Use Exchange Templates and Examples

Anypoint Exchange provides templates you can use as a starting point for your app, as well as examples that illustrate a complete solution.

Next Step

After you complete the prerequisites and experiment with templates and examples, you are ready to create an app with Anypoint Studio.

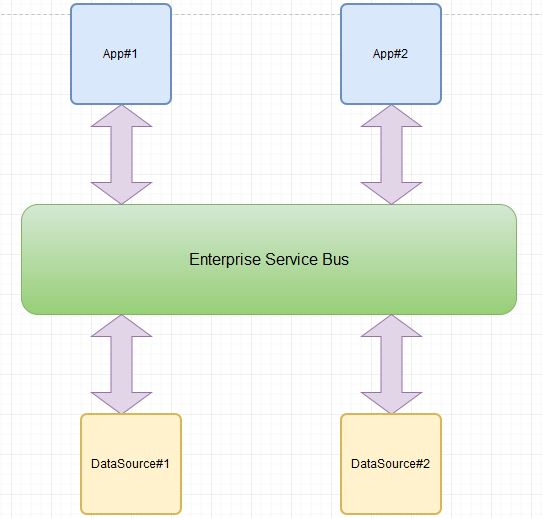

Introduction to Anypoint Connectors

Anypoint Connectors are reusable extensions to Mule runtime engine (Mule) that enable you to integrate a Mule app with third-party APIs, databases, and standard integration protocols. Connectors abstract the technical details involved with connecting to a target system. All connectivity in Mule 4 is provided through connectors.

Using connectors in a Mule app provides the following advantages:

- Reduces code complexity, because you can connect a Mule app to a target system without knowing all of the details required to program to the target system

- Simplifies authenticating against the target system

- Proactively infers metadata for the target system, which makes it easier to identify and transform data with DataWeave

- Makes code maintenance easier because:

  - Not all changes in the target system require changes to the app.

  - The connector configuration can be updated without requiring updates to other parts of the app.

Enable Connectivity with Connectors

Use connectors to connect a Mule app to specific software applications, databases, and protocols. To see a list of the MuleSoft-built connectors in Mule 4, browse Anypoint Exchange.

Connect to Software Applications

You can use connectors to connect a Mule app to specific software applications and to perform actions on the connected application.

For example, you can use Anypoint Connector for SAP (SAP Connector) to automate sales order processing between SAP ERP and your customer relationship management (CRM) software.

Likewise, you can use Anypoint Connector for Salesforce (Salesforce Connector) to integrate Salesforce with other business applications, such as ERP, analytics, and data warehouse systems.

Connect to Databases

You can use connectors to connect a Mule app to one or more databases and to perform actions on the connected database.

For example, you can use Anypoint Connector for Databases (Database Connector) to connect a Mule app to any relational database engine. Then you can perform SQL queries on that database.

Likewise, you can use Anypoint Connector for Hadoop Distributed File System (HDFS Connector) to connect a Mule app to a Hadoop Distributed File System (HDFS). Then you can integrate databases such as MongoDB with Hadoop file systems to read, write, receive files, and send files on the HDFS server.

Connect to Protocols

You can use connectors to send and receive data over protocols and, for some protocol connectors, to perform protocol operations.

For example, you can use Anypoint Connector for LDAP (LDAP Connector) to connect to an LDAP server and access Active Directory. Then you can add user accounts, delete user accounts, or retrieve user attributes, such as the user’s email or phone number.

Likewise, you can use Anypoint Connector for WebSockets (WebSockets Connector) to establish a WebSocket for bidirectional and full-duplex communication between a server and client, and to implement server push notifications.

How Connectors Work

Connectors can perform one or more functions in an app, depending on where you place them and the capabilities of the specific connector. A connector can act as:

- An inbound endpoint that starts the app flow. Connectors that have input sources can perform this function.

- A message processor that performs operations in the middle of a flow

- An outbound endpoint that receives the final payload data from the flow
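The three roles in the list above can be pictured with a small conceptual model. This is not Mule's API: Mule flows are configured declaratively, and every name below (`run_flow`, `mark_assigned`, the sample records) is a hypothetical stand-in chosen for illustration.

```python
from typing import Any, Callable, Iterable, List

Message = dict


def run_flow(source: Iterable[Message],
             processors: List[Callable[[Message], Message]],
             sink: Callable[[Message], Any]) -> None:
    """Pass each message from the source through the processors to the sink."""
    for message in source:              # inbound endpoint: starts the flow
        for process in processors:      # message processors: mid-flow operations
            message = process(message)
        sink(message)                   # outbound endpoint: receives the final payload


def mark_assigned(message: Message) -> Message:
    """Hypothetical mid-flow operation that returns an updated copy."""
    return {**message, "state": "assigned"}


incoming = [{"number": "INC-1", "state": "new"},
            {"number": "INC-2", "state": "new"}]
delivered: List[Message] = []

run_flow(incoming, [mark_assigned], delivered.append)
print(delivered)
```

The same connector can appear in more than one position, which is why its role depends on where you place it in the flow.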

Input Sources

Some connectors have input sources, or “triggers”. These components enable a connector to start a Mule flow by receiving information from the connector’s resource. For example, when a Salesforce user updates a sales opportunity, a flow that starts with a Salesforce Connector component can receive the information and direct it to a database connector for processing.

To see if a connector can act as an input source, see the Reference Guide for the connector.

App developers can also use an HTTP Listener or Scheduler as an input source for a flow:

- HTTP Listener is a connector that listens to HTTP requests. You can configure an HTTP Listener to start a flow when it receives specified requests.

- Scheduler is a core component that starts a flow when a time-based condition is met. You can configure a Scheduler to start a flow at regular intervals, or you can specify a more flexible cron expression to start the flow.
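To make the "regular intervals" case concrete, the following sketch computes when a fixed-interval trigger would fire. It only illustrates the timing idea: the Mule Scheduler is configured in the app rather than coded this way, the function name is hypothetical, and cron expressions are not covered here.

```python
from datetime import datetime, timedelta
from typing import List


def fixed_interval_times(start: datetime, every: timedelta, count: int) -> List[datetime]:
    """Return the first `count` trigger times, spaced `every` apart from `start`."""
    return [start + every * i for i in range(count)]


# Example: a trigger every 15 minutes, starting at midnight.
times = fixed_interval_times(datetime(2024, 1, 1, 0, 0), timedelta(minutes=15), 4)
for moment in times:
    print(moment.isoformat())
```

A cron expression is the better fit when the schedule is not a constant gap, for example "weekdays at 09:00".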

Operations

Most connectors have operations that execute API calls or other actions on their associated system, database, or protocol. For example, you can use Anypoint Connector for Workday (Workday Connector) to create a position request in Workday or add a fund to the financial management service. Likewise, you can use Anypoint Connector for VMs (VM Connector) to consume messages from and publish messages to an asynchronous queue.
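The publish and consume operations mentioned for VM Connector follow the familiar queue pattern. As a loose analogy only, the sketch below uses Python's standard `queue.Queue`; it does not reproduce VM Connector's behavior or configuration, and the helper names are hypothetical.

```python
import queue
from typing import Any, Optional


def publish(q: queue.Queue, message: Any) -> None:
    """Add a message to the queue without waiting for a consumer."""
    q.put(message)


def consume(q: queue.Queue, timeout: float = 0.1) -> Optional[Any]:
    """Take the oldest message, or return None if none arrives in time."""
    try:
        return q.get(timeout=timeout)
    except queue.Empty:
        return None


orders: queue.Queue = queue.Queue()
publish(orders, {"id": 1})
publish(orders, {"id": 2})

first = consume(orders)    # oldest message first (FIFO)
second = consume(orders)
nothing = consume(orders)  # queue is now empty
```

The publisher returns as soon as the message is queued, which is what makes the exchange asynchronous.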

To see a list of operations for a connector, see the Reference Guide for that connector.

Access Connectors and Related Assets

Anypoint Exchange provides access to all publicly available connector assets including connectors, templates, and examples.

Connectors in Exchange

You can use Exchange as a starting point for discovering all or a subset of MuleSoft-built connectors.
