You can manage projects using both Microsoft Project and the ServiceNow Project Management application.

Users with the it_project_manager role can export projects and project tasks to Microsoft Project, where they can be managed separately and then imported back into the instance as needed. You can import projects created in Microsoft Project 2003, 2007, 2010, 2013, or 2016 into the Project Management application.

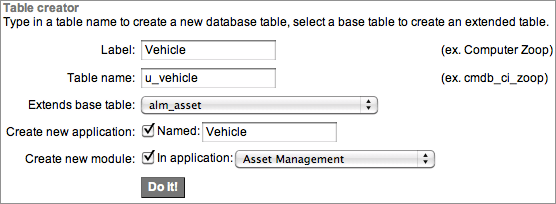

If you import a Microsoft project into the instance as a new project, a new record is created in the Project [pm_project] table, and tasks associated with the project are added to the Project Task [pm_project_task] table.

Only the fields that are common or mapped between the applications are imported. Imported projects are brought into the instance with both Priority and Risk set to Low.

If you import a Microsoft project into an existing project, the instance checks the Text10 field in the top-level Microsoft Project task.

If the Text10 field contains a recognizable sys_id, the project was previously exported from the instance. In this case, the values from the Microsoft Project file overwrite the values for the existing project.
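The check described above can be sketched as follows. This is a minimal illustration, not the instance's actual logic: it assumes a sys_id is a 32-character hexadecimal string, and the helper names `looks_like_sys_id` and `import_mode` are hypothetical.

```python
import re

# Assumption: a sys_id is a 32-character hexadecimal string.
SYS_ID_PATTERN = re.compile(r"[0-9a-fA-F]{32}")


def looks_like_sys_id(text10):
    """Return True if the Text10 value has the shape of a sys_id."""
    if text10 is None:
        return False
    return SYS_ID_PATTERN.fullmatch(text10.strip()) is not None


def import_mode(text10):
    """Hypothetical helper: describe how the Text10 value would be interpreted."""
    if looks_like_sys_id(text10):
        return "previously exported: file values overwrite project values"
    return "no recognizable sys_id in Text10"
```

For example, `import_mode("0123456789abcdef0123456789abcdef")` reports a previously exported project, while an empty or free-text Text10 value does not.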

When you import a project into the instance, project constraints that are not supported are converted as follows:

- Time constraints: The Project Management application sets the time constraint for all imported tasks to Start on specific date irrespective of their time constraint in Microsoft Project.

Note: The resource name in Microsoft Project should map to the name of the user in the instance.

The following calendar elements from Microsoft Project are not imported into Project Management:

- Project calendars

- User calendars

- Schedules

The imported project uses the default schedule of a Monday to Friday workday from 8 A.M. to 5 P.M. with an hour break for lunch.
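To make the default schedule concrete, the sketch below models it in Python. The text does not say when the lunch break falls, so the 12:00 to 13:00 window is an assumption, and the function names are illustrative only.

```python
from datetime import date, datetime, time

WORK_START = time(8, 0)    # 8 A.M., from the text
WORK_END = time(17, 0)     # 5 P.M., from the text
LUNCH_START = time(12, 0)  # assumption: the text only says "an hour break for lunch"
LUNCH_END = time(13, 0)    # assumption


def is_working_time(moment: datetime) -> bool:
    """Return True if the moment falls inside the default schedule."""
    if moment.weekday() >= 5:  # Saturday = 5, Sunday = 6
        return False
    current = moment.time()
    if not (WORK_START <= current < WORK_END):
        return False
    return not (LUNCH_START <= current < LUNCH_END)


def working_hours_on(day: date) -> int:
    """Return the number of working hours on a given calendar day."""
    if day.weekday() >= 5:
        return 0
    span = WORK_END.hour - WORK_START.hour
    lunch = LUNCH_END.hour - LUNCH_START.hour
    return span - lunch
```

Under these assumptions each weekday contributes eight working hours, so a task planned in Microsoft Project against a different calendar may span different dates than the default schedule would suggest.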

Supported versions

- Microsoft Project 2003

- Microsoft Project 2007

- Microsoft Project 2010

- Microsoft Project 2013

- Microsoft Project 2016

Project export to Microsoft Project:

If you are using Microsoft Project to manage project activities, you can export a project to XML format and import it into Microsoft Project.

Users with the it_project_manager role can export a project using:

- The Export Project module

- The Project form

Project managers can also export project tasks using the Project Task form.
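Before opening an exported file in Microsoft Project, it can be useful to inspect it. The sketch below reads task names and dates with Python's standard library. It assumes the export follows the Microsoft Project XML interchange schema (namespace `http://schemas.microsoft.com/project`); the sample document is invented for illustration and is not real export output.

```python
import xml.etree.ElementTree as ET

# Namespace used by the Microsoft Project XML interchange format.
NS = {"msp": "http://schemas.microsoft.com/project"}

# Invented sample data standing in for an exported project file.
SAMPLE_XML = """<Project xmlns="http://schemas.microsoft.com/project">
  <Name>Sample project</Name>
  <Tasks>
    <Task>
      <UID>1</UID>
      <Name>Design</Name>
      <Start>2024-01-01T08:00:00</Start>
      <Finish>2024-01-05T17:00:00</Finish>
    </Task>
    <Task>
      <UID>2</UID>
      <Name>Build</Name>
      <Start>2024-01-08T08:00:00</Start>
      <Finish>2024-01-19T17:00:00</Finish>
    </Task>
  </Tasks>
</Project>
"""


def list_tasks(xml_text):
    """Return (name, start, finish) tuples for every task in the document."""
    root = ET.fromstring(xml_text)
    tasks = []
    for task in root.findall("msp:Tasks/msp:Task", NS):
        tasks.append((
            task.findtext("msp:Name", namespaces=NS),
            task.findtext("msp:Start", namespaces=NS),
            task.findtext("msp:Finish", namespaces=NS),
        ))
    return tasks
```

To inspect a real export, read the file contents into a string and pass it to `list_tasks`; fields missing from a task come back as `None`.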

Create custom field mapping for Microsoft Project file import

Map custom fields from Microsoft Project to ServiceNow fields before importing a project.

Before you begin

Create custom fields in your ServiceNow instance before mapping them to Microsoft Project fields.

About this task

Map the custom fields that you create in your ServiceNow instance with custom fields in the Microsoft Project file you plan to import.

The supported data types for field mapping between Microsoft Project and ServiceNow instances are:

- True/False

- Calendar

- Date/Time

- Choice

- Color

- Currency

- Decimal

- Due Date

- Floating Point Number

- Date

- Duration

- List

- Time

- HTML

- Integer

- Long

- Percent Complete

- Phone Number (E164)

- Reference

- Name-Value Pairs

- String

- Translated HTML

- Translated Text

- URL

Procedure

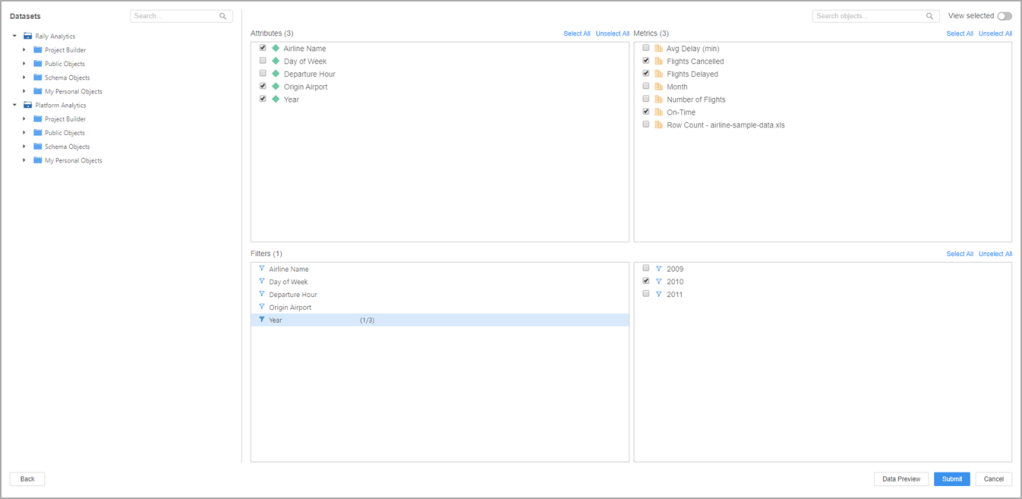

- Navigate to Project Administration > Project – MSP Import Field Mappings.

- Click New.

- From the Table list, select the table in which you created the custom field.

- In the Microsoft Project Column field, enter the name of the custom field in your Microsoft Project file that you want to map.

- In the Destination Column list, select the custom ServiceNow field that you want to map to the Microsoft Project field while importing a project.

- Click Submit.
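Conceptually, each mapping record created in the procedure above ties one Microsoft Project column to one destination column on a table. The sketch below illustrates that idea only; it is not how the instance stores or applies mappings. The `FieldMapping` class, the `apply_mappings` helper, and the `u_vendor` field name are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FieldMapping:
    """Hypothetical stand-in for one MSP import field mapping record."""
    table: str               # table that holds the custom field
    msp_column: str          # custom field name in the Microsoft Project file
    destination_column: str  # custom field name in the instance


def apply_mappings(table, msp_values, mappings):
    """Keep only the Microsoft Project values that have a mapping for the table."""
    mapped = {}
    for mapping in mappings:
        if mapping.table == table and mapping.msp_column in msp_values:
            mapped[mapping.destination_column] = msp_values[mapping.msp_column]
    return mapped


# Example: only Text1 is mapped, so Text2 is dropped.
mappings = [FieldMapping("pm_project_task", "Text1", "u_vendor")]
row = {"Text1": "Acme", "Text2": "not mapped"}
mapped_row = apply_mappings("pm_project_task", row, mappings)
```

The example mirrors the rule that only common or mapped fields are imported: a custom column without a mapping record is simply left out.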

What to do next

- Import the Microsoft Project file.

- Configure the Project form to add the custom fields that you want to see.

Import a Microsoft Project file:

You can import Microsoft Project files into the Project Management application.

Before you begin

Role required: it_project_manager

About this task

Before importing a Microsoft Project file into the ServiceNow instance, consider the following information.

- A Microsoft Project project imported into a teamspace is available only to users who can access the teamspace.

- To import custom fields from your Microsoft Project file, create those custom fields in your ServiceNow instance first, and then create a mapping between the fields before importing the project.

- You can also use the Scripted Extension Points for importing custom fields without creating and mapping the custom fields manually. Use the MSProjectImportTaskFormatter Extension Point to create a script include and map custom fields in Microsoft Project and ServiceNow. You can also use this Extension Point to modify the data while importing a project.

- Project tasks are not recalculated when they are imported from the Microsoft Project file. Once the project is in the ServiceNow instance, it is treated as a manual project.

- Importing a Microsoft Project project that has inter-project dependencies does not import the shadow tasks.

- Only the subprojects are imported into the ServiceNow instance; the subproject tasks are not imported.

- The import of a Microsoft Project file into ServiceNow fails in the following cases:

  - The project and its tasks were created in the ServiceNow instance before the import.

  - Tasks were created in the ServiceNow instance in a project that was earlier imported from a Microsoft Project file, and the file is then reimported.

    Note: To retain the project tasks that were created in the ServiceNow instance, first export the project to a Microsoft Project file, and then reimport the file into the instance.

  - A task that the import would delete has any of these related entities: cost plan, benefit plan, resource plan, time card, or expense lines.

  - The values for lag or lead time dependencies are not in the supported format:

    - Positive lag time dependency values for days, hours, and minutes are allowed. Negative lag time dependencies are allowed only for days.

    - All other elapsed duration types from Microsoft Project, such as emin, eday, eweek, emon, eyr, or %, are not allowed for import.
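The lag and lead rules above lend themselves to a pre-import check. The following sketch is one possible reading of those rules, not the validation the instance performs: the accepted unit spellings (`d`, `h`, `min`, and so on) are assumptions, and any unit the text does not list as supported, including weeks, is rejected.

```python
import re

# A signed number followed by a unit, for example "2d", "-1 day", or "50%".
LAG_PATTERN = re.compile(r"^\s*([+-]?\d+(?:\.\d+)?)\s*([a-z%]+)\s*$", re.IGNORECASE)

# Assumed spellings for the three units the text lists as supported.
UNIT_ALIASES = {
    "d": "days", "day": "days", "days": "days",
    "h": "hours", "hr": "hours", "hour": "hours", "hours": "hours",
    "m": "minutes", "min": "minutes", "mins": "minutes", "minutes": "minutes",
}


def is_supported_lag(value: str) -> bool:
    """Return True if a lag or lead value fits the rules described in the text."""
    match = LAG_PATTERN.match(value)
    if match is None:
        return False
    amount = float(match.group(1))
    unit = UNIT_ALIASES.get(match.group(2).lower())
    if unit is None:
        # Elapsed units (emin, eday, ...), percentages, and anything unlisted.
        return False
    if amount < 0:
        # Negative lag (lead time) is allowed only for days.
        return unit == "days"
    return True
```

Running such a check over the dependency values in a file before importing can flag entries like `-3h` or `2eday`, which the rules above would reject.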

Procedure

- Navigate to Project > Projects > Import.

- Click Choose File to select a Microsoft Project file.

- To import the Microsoft project as a new project, select the Create new project option.

- To import the Microsoft project as a subset of an active, existing project or task:

  - Select Update an existing project.

  - Click the reference lookup icon and select a project or task. Only active projects appear in the list.

- Click Import.

Result

- A project task that was imported into the ServiceNow instance earlier and has associated time cards, resource plans, cost plans, benefit plans, or expense lines is retained on reimport even if it is deleted from Microsoft Project.

- Dates in the ServiceNow project remain the same as the dates in the Microsoft Project file.

- In a ServiceNow project with subprojects, the following details change:

  - The WBS order of imported tasks is regenerated after import.

  - The Planned Start Date and Planned End Date of the parent project are rolled up.

  - The State of the parent project and tasks is rolled up.

  - The % Complete on the top task is rolled up.
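As an illustration of what rolling up planned dates can mean, the sketch below takes the earliest child start and the latest child end as the parent's dates. This is a common convention and an assumption here; the text does not state the exact roll-up rule the instance applies, and the function name is hypothetical.

```python
from datetime import datetime


def roll_up_planned_dates(children):
    """Return (earliest start, latest end) for a list of (start, end) pairs.

    Assumption: the parent spans from the earliest child start to the
    latest child end.
    """
    if not children:
        raise ValueError("at least one child task is required")
    starts = [start for start, _ in children]
    ends = [end for _, end in children]
    return min(starts), max(ends)


# Example with two child tasks.
child_dates = [
    (datetime(2024, 1, 8, 8, 0), datetime(2024, 1, 19, 17, 0)),
    (datetime(2024, 1, 1, 8, 0), datetime(2024, 1, 5, 17, 0)),
]
parent_start, parent_end = roll_up_planned_dates(child_dates)
```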

Export project tasks

The task being exported must be associated with a project that uses either the Project Management Schedule or the Default MS Project schedule.

Before you begin

Role required: it_project_manager

Procedure

- Navigate to Project > Projects > All.

- Open the project.

- Scroll to the Project Tasks related list and click a task number to open the Project Task form.

- Right-click the form header and select Export Task to MS Project from the context menu.

The task is exported to a folder on your system.

- Open Microsoft Project to import the exported project task files. Refer to Microsoft product documentation for instructions.

Calendars and schedules: Limitations

Some calendar elements from Microsoft Project are not imported into the Project Management application.

- Project calendars

- User calendars

- Schedules

The imported project uses the default schedule of a Monday to Friday workday from 8 A.M. to 5 P.M. with an hour break for lunch, starting with the v3 application.

Using the export project module

The Export Project module exports a project to XML format.

Before you begin

Role required: it_project_manager

About this task

ServiceNow projects must use either the Project Management Schedule or the Default MS Project schedule before they can be exported.

Procedure

- Navigate to Project > Administration > Export Project.

- Select a project in the Project to export field.

- Click Export to export the project to a folder on your system.

- Open Microsoft Project to import the exported project files. Refer to Microsoft product documentation for instructions.
